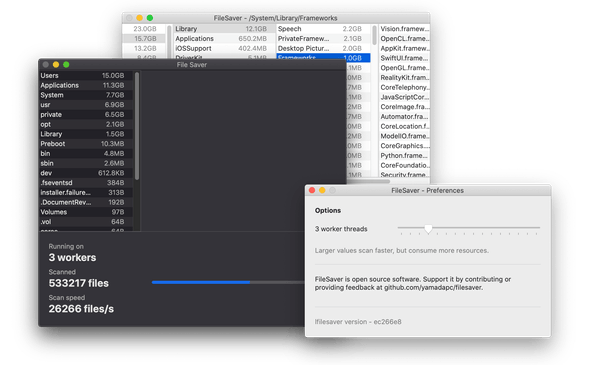

FileSaver - v1.1.1

Fast free and open-source disk usage scanner

FileSaver is now “Parallel Disk Scanner” and is available for sale on the app store.

Since I got my latest MacBook, I can only go for a couple of months before it

starts running out of free disk space. It’s a pretty bad situation where to save

a bit on SSD size, I have to spend my time cleaning-up large files like music

samples, VMs, node_modules and so on.

So then I decided to save a lot of time 😂 and instead of buying one of the available “disk cleaning” solutions, I spent some time trying some open-source software and eventually decided to write FileSaver, a fast multithreaded disk usage scanner.

On my MacBook, scanning 5.3 million files takes 100 seconds, with around 51.000 files/second of throughput. The compromise is high memory usage of around 2GB.

What I used to do with coreutils

I used to do the following command combination to find sizes:

# Find file sizes

du -h ./directory | \

# Sort by size

# (just sort on linux, requires gnu coreutils on OSX)

gsort -h | \

# Put on a pager

lessThis works fine except that:

- It’s slow

- It’s not interactive

duc

I’ve also tried a great alternative to the above, called duc.

duc is a great open-source utility written in C that performs this task a bit

faster and with interactive options. It can also generate an index that can be

re-used, so we don’t need to scan all files when looking for places to clean.

Even so, what I found was that on my filled-up disk, it was really slow to

generate the index for the whole thing, including system directories like

/Library and /usr/local. As a developer, a lot of the trash was around

those.

Parallel size scanning

I profiled duc and found that it’s single threaded nature was a bit

bottleneck. Writes to the index blocked scanning file sizes and the calculations

to aggregate sizes also probably compromised throughput.

I ran over this issue:

Some thoughts about indexing, filtering, parallelization, etc https://github.com/zevv/duc/issues/205

The idea that I could write a multithreaded program and get a massive performance boost stuck with me.

I considered contributing to duc, but it had two major flaws for me:

- It’s GPL licensed

- It’s written in C

The 2nd point is just because, knowing a bit of C++ I didn’t see the point of going to C.

I then designed and iterated on a parallel scanning architecture / aggregation algorithm that achieves pretty good results in terms of throughput.

Problem domain and aggregation overview

The problem we have to solve is that of finding directory sizes based on all their children’s sizes.

Given a certain path to scan, we want to

lstat it. This will give us its size and

file type. If this path is a directory, we then want to scan all its children.

This path will have “completed” once all its children have completed or if

lstat says it isn’t a directory.

So we have to continuously scan children and as they come in, update the parent path’s completed children count (and do this recursively up).

To support better UX and performance, whenever a path is completed, its delta update in size is carried up each of its parents.

For example, imagine we have the following directory structure:

content

└── blog

└── filesaver-freeing-up-space

├── index.md

└── logo.png- We’ll start the scan at

/content- Since it’s a directory we read its children

- Its size and 1 child count is stored

- We do the same at

/content/blogand then/content/blog/filesaver-freeing-up-space - At this point our state looks like:

/content- 1 pending child path/content/blog- 1 pending child path/content/blog/filesaver-freeing-up-space- 2 pending child paths

- We scan

index.md/content/blog/filesaver-freeing-up-space/index.md- is completed + 100KB/content/blog/filesaver-freeing-up-space- 1 pending child paths (+ 100KB)- Other paths’ pending counts are kept, but their total sizes are updated

/content/blog- 1 pending child paths (+ 100KB)/content- 1 pending child paths (+ 100KB)

- We scan

logo.png/content/blog/filesaver-freeing-up-space/logo.png- is completed + 300KB/content/blog/filesaver-freeing-up-space- is completed (+ 300KB)/content/blog- is completed (+ 300KB)/content- is completed (+ 300KB)

Architecture

FileSaver is a simple program, but it’s structured. The architecture is as follows.

Components

- Lock based queue

- Worker Threads

- Reader Thread

- UI Thread

Queue

We pass data between threads using an uninteresting lock based queue, plus one extra lock on the size/pending state, since the UI thread will read it while its being processed.

For this we have filesaver::data::WorkQueue

Worker Threads

The workers work with two queues:

- workQueue provides incoming paths to scan

- resultQueue has entries pushed onto it, with their sizes, types and child paths attached

The worker will enqueue any children it finds.

Reader thread

The reader thread performs the aggregation as described in the aggregation

overview. The state gets stored in a giant hash-map (std::unordered_map)

mapping paths to their sizes and number of pending children.

UI

The UI uses AppKit and is written in Objective-C++. It polls the state for the directories that are visible on the screen.

Distribution

For now, FileSaver is available from its GitHub page. The project has structure that should make it convenient to include it as a library onto a different codebase. Though there could still be improvements for this use-case like organizing public headers and the build system, it goes most of the way and should have good DoxyGen docs.

The code is distributed under the MIT license.