Test Plugin Host

An early VST plugin host to aid quick prototyping of audio plugins written in Rust

Over the last several weeks, I’ve been locked down at home. A new cluster of the Delta variant has put Sydney, as well as other parts of Australia, into lockdown.

With nothing else to do, I started exploring building audio apps with Rust.

My main goal was to learn and have fun, but I had a specific product in mind driving my exploration, which has now reached a minimally useful version.

All the code I’m writing is MIT licensed and available on GitHub here.

It still needs a lot of polish and testing, and I’m learning as I go, as I’m not a professional audio developer.

However, I want to share its existence with the world and also share some thoughts from my first development attempts.

Plugin Host

The product I had in mind was plugin-host and plugin-host-gui.

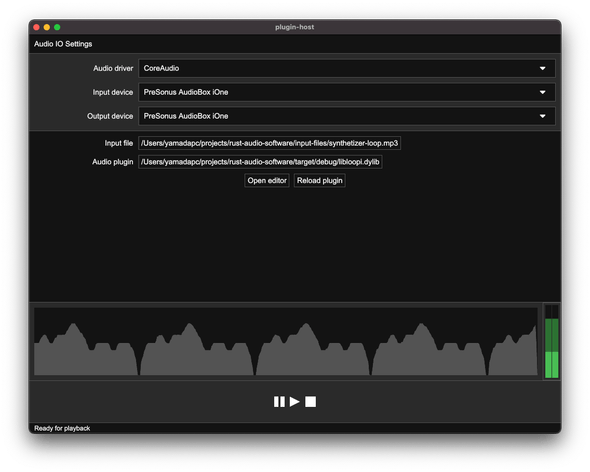

Plugin Host is a testing utility for VST plug-ins.

It comes in two flavours: as a command-line tool and as a GUI.

The workflow is as follows:

- Start plugin-host

- Open an input audio file with it

- Open your plugin’s dylib with it

- Develop your plugin

Plugin host will host your plug-in and loop the audio file over it. Whenever you recompile your dylib, plugin host will reload your VST and its editor and restart audio playback with the new version.
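Here’s a rough sketch of how a host could notice that the dylib was recompiled. It assumes the simplest possible approach, polling the file’s modification time; the post does not say how plugin-host actually detects changes, and `ReloadTracker` and `poll_dylib` are hypothetical names used only for illustration.

```rust
use std::path::Path;
use std::time::SystemTime;

/// Remembers the newest modification time seen for the plugin dylib.
/// Hypothetical type, not part of plugin-host.
pub struct ReloadTracker {
    last_seen: Option<SystemTime>,
}

impl ReloadTracker {
    pub fn new() -> Self {
        ReloadTracker { last_seen: None }
    }

    /// Returns true when `modified` is newer than anything seen so far.
    /// The first observation only records a baseline and returns false.
    pub fn observe(&mut self, modified: SystemTime) -> bool {
        let changed = match self.last_seen {
            Some(previous) => modified > previous,
            None => false,
        };
        if changed || self.last_seen.is_none() {
            self.last_seen = Some(modified);
        }
        changed
    }
}

/// Polls the file system once. Errors (for example the file being absent
/// in the middle of a build) are treated as "no change".
pub fn poll_dylib(tracker: &mut ReloadTracker, path: &Path) -> bool {
    std::fs::metadata(path)
        .and_then(|meta| meta.modified())
        .map(|modified| tracker.observe(modified))
        .unwrap_or(false)
}
```

A host loop would call `poll_dylib` every few hundred milliseconds from a non-audio thread and, when it returns true, stop playback, reload the plug-in and start again.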

Doing proper “hot-reload” with fade-ins/outs and without restarting the audio-thread may come at some point soon.

Very quick demo

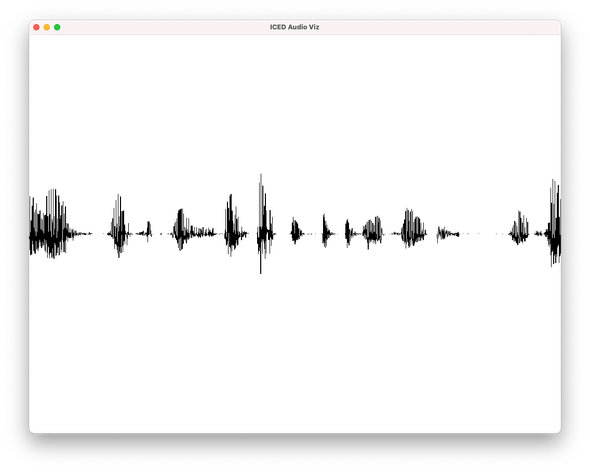

Here’s a 1-minute demo. Imagine I’m working on a new looper VST, and I’m trying to build a nice audio visualization chart.

I started with a blue color, but now I’m not quite sure. So I make some changes and re-compile:

For this small VST, most builds should complete in a couple of seconds, which is an awesome dev-loop for a Rust-based plug-in. I could try things out, see how they feel and continue.

More features I’d want to add

Input & Output

For now, the host only supports looping over a file, but I’ll add support for processing normal input soon. Then, one should be able to write some audio-processing code, a GUI, maybe plug their guitar in and see how things feel.

The CLI version of the host supports generating an output .wav file and outputting some basic performance diagnostics, but that has not been added to the GUI yet.

Protecting your ears and speakers

It’s quite a bad idea to hot-reload audio-processing code with your speakers turned up as you may damage your ears or speakers depending on what type of mistake you make.

In order to mitigate this, I’d like to add a limiter and similar protections onto the host, so we could iterate without fear.
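As a rough idea of what the most basic protection could look like, here’s a sketch of a safety stage applied to the output buffer after the plug-in runs. It is a plain hard clipper plus a guard against NaN and infinite samples, not a real limiter with attack and release, and `protect_output` is a hypothetical function rather than anything that exists in the host.

```rust
/// Clamps every sample to [-ceiling, ceiling] and silences non-finite values.
/// Returns how many samples had to be altered, which a host could surface
/// as a warning. `ceiling` is assumed to be a finite number.
pub fn protect_output(buffer: &mut [f32], ceiling: f32) -> usize {
    let ceiling = ceiling.abs();
    let mut altered = 0;
    for sample in buffer.iter_mut() {
        if !sample.is_finite() {
            // NaN or infinity usually means a bug in the processing code.
            *sample = 0.0;
            altered += 1;
        } else if sample.abs() > ceiling {
            *sample = (*sample).clamp(-ceiling, ceiling);
            altered += 1;
        }
    }
    altered
}
```

Hard clipping distorts loud signals, so this only guards against runaway output; a proper limiter would reduce gain smoothly instead.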

Visualization

For now, the host only draws a very basic RMS chart at the bottom & a broken (not using dBs) volume indicator. It’d be great to provide more tools that’d be commonly useful for developing audio processors, such as a spectrogram.
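For reference, here’s a small sketch of the two calculations involved: the RMS level of a block of samples, and the conversion of a linear amplitude to decibels that the volume indicator would need. The function names and the `floor_db` parameter are illustrative choices, not code from the host.

```rust
/// Root-mean-square level of a block of samples (0.0 for an empty block).
pub fn rms(samples: &[f32]) -> f32 {
    if samples.is_empty() {
        return 0.0;
    }
    let sum_of_squares: f32 = samples.iter().map(|s| s * s).sum();
    (sum_of_squares / samples.len() as f32).sqrt()
}

/// Converts a linear amplitude to decibels relative to full scale (1.0).
/// The result is clamped at `floor_db` so that silence maps to a finite
/// value instead of negative infinity.
pub fn amplitude_to_db(amplitude: f32, floor_db: f32) -> f32 {
    if amplitude <= 0.0 {
        return floor_db;
    }
    (20.0 * amplitude.log10()).max(floor_db)
}
```

With these, a meter would display `amplitude_to_db(rms(block), -100.0)` rather than the raw linear value, which matches how loudness is usually read.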

Volume control & fixes

This is a basic thing that is missing.

Augmented Audio

I’m pushing these things to the augmented-audio repository on GitHub.

Ultimately, I’d very much like to have some kind of framework-y selection of solutions for common audio application problems in Rust.

Some examples of “solutions” that need to be selected (or built):

- Testing tools - Such as plugin-host, but also unit-testing approaches like snapshot testing or property testing over the audio

- Reference counting background GC - I’m using a basedrop wrapper to avoid de-allocation on the audio-thread & have a WIP alternative. New things that I think are missing in both: metrics, more rigid scheduling of collection, more tests.

- GUI toolkit

- Audio processor abstraction & audio graph implementation

- Stand-alone & multiple plug-in format packaging

- Filters & common effects

- Guidelines of how to handle shared audio state - e.g. “ProcessorHandle” structs?
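To make the “reference counting background GC” item more concrete, here’s a minimal sketch of the general idea using only the standard library. This is not basedrop’s API, nor the WIP alternative; `DeferredCollector` is a hypothetical type. The collector keeps one extra `Arc` to every shared value, so dropping a handle on the audio thread only decrements a counter, and the actual de-allocation happens when a background thread calls `collect`.

```rust
use std::sync::Arc;

/// Holds one reference to every shared value so the last "real" owner
/// going away never frees memory on the thread where it was dropped.
pub struct DeferredCollector<T> {
    tracked: Vec<Arc<T>>,
}

impl<T> DeferredCollector<T> {
    pub fn new() -> Self {
        DeferredCollector { tracked: Vec::new() }
    }

    /// Allocates `value` (on a non-real-time thread) and returns a handle
    /// that is safe to clone and drop anywhere.
    pub fn share(&mut self, value: T) -> Arc<T> {
        let handle = Arc::new(value);
        self.tracked.push(Arc::clone(&handle));
        handle
    }

    /// Meant to be called periodically from a background thread.
    /// Frees every value whose only remaining reference is the collector's
    /// own and returns how many values were freed.
    pub fn collect(&mut self) -> usize {
        let before = self.tracked.len();
        self.tracked.retain(|value| Arc::strong_count(value) > 1);
        before - self.tracked.len()
    }

    /// Number of values currently kept alive by the collector.
    pub fn tracked_len(&self) -> usize {
        self.tracked.len()
    }
}
```

The `tracked_len` method hints at the kind of metric mentioned in the list: how much garbage is waiting, and how often `collect` actually frees something.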

There’s a ton of amazing work in the RustAudio GitHub organisation, which is super helpful for me, as well as in other framework-y projects such as baseplug.

augmented-audio is my own exploration of this space, leveraging some tooling from RustAudio & several crates I’m trying to write or port from C++.

Web GUI: Earlier steps

The initial idea for these libraries and for Plugin Host GUI was to explore using a web GUI for audio apps.

I’ve now deprecated this work and will replace all web bits with native GUI.

Notes on it will continue to live in WEB_GUI.md and the legacy-web-gui branch of the repository.

However, it’s still an interesting little experiment, as I did get it to work and perform acceptably.

There were two parts of this:

plugin-host-gui- Equivalent to the GUI you saw above, but built using

tauri

- Equivalent to the GUI you saw above, but built using

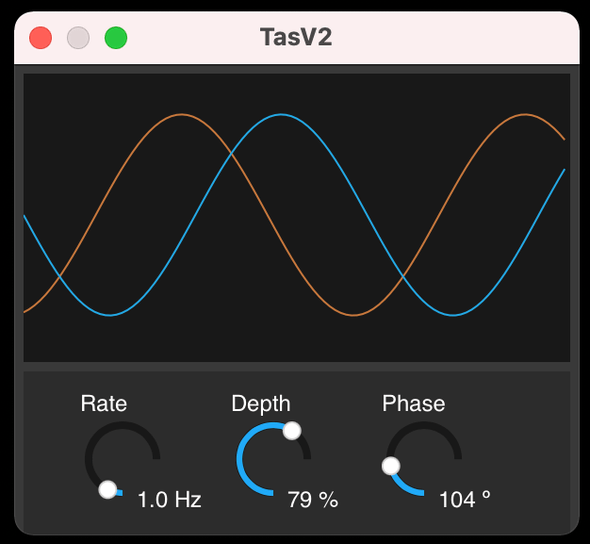

tasv2- A tremolo plug-in built with a React.js front-end hosted on a

WkWebViewand communicating through messages with the Rust audio processing parts

- A tremolo plug-in built with a React.js front-end hosted on a

I won’t discuss the tauri app, though you may check the markdown file above for notes, including how I was trying to get around bottlenecks for the volume meter.

Web front-ends for VST plug-ins

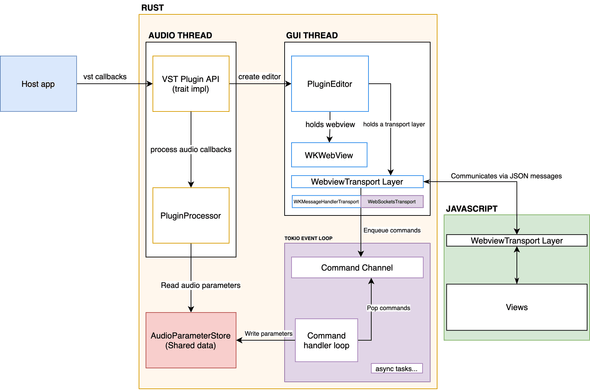

The incomplete web-based plug-in idea would work in the following manner.

VST layer

Rust exposes VST APIs for an audio host to call using the rust-vst crate.

It exposes callbacks to process audio, as well as callbacks to create its “editor”.

For the very simple web plugin prototype I built, a shared AudioParameterStore object held all parameter states and definitions (value & instructions to render on the GUI).

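```rust
use std::collections::HashMap;
use std::sync::Arc;

// `ParameterId` and `ParameterType` are declared elsewhere and are not shown in this excerpt.

/// Interface every plug-in parameter exposes to the host and to the GUI.
pub trait PluginParameterLike {
    fn name(&self) -> String;
    fn label(&self) -> String;
    fn text(&self) -> String;
    fn value(&self) -> f32;
    fn set_value(&self, value: f32);
    fn can_be_automated(&self) -> bool;
    fn value_range(&self) -> (f32, f32);
    fn value_type(&self) -> ParameterType;
    fn value_precision(&self) -> u32;
}

/// Shared, reference-counted handle to a parameter.
pub type ParameterRef = Arc<dyn PluginParameterLike>;

struct ParameterStoreInner {
    parameters: HashMap<ParameterId, ParameterRef>,
    parameter_ids: Vec<ParameterId>,
}
```

GUI Thread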

The host will call into the VST passing a pointer for the platform-specific view the plugin GUI should attach itself to.

The GenericParametersEditor struct creates a webview and a transport layer to communicate with it. Internally this uses the webview-holder and darwin-webkit crates, which are just low-level wrappers around the WebKit Objective-C API.

Transport layer

In order to exchange messages with JavaScript, there’s an abstract transport layer. Two WebviewTransport implementations were available.

WkMessageHandlerTransport uses WkScriptMessageHandlers to exchange messages with JavaScript. This is a WebKit API for attaching native callbacks for JavaScript to post messages into. The ‘Rust to JavaScript’ side is achieved by evaluating a JavaScript function call that broadcasts messages onto a global EventEmitter.

WebSocketsTransport uses websockets to exchange messages with JavaScript.

The reason I implemented both transports is that WebSockets is more flexible, since it allows the plugin front-end to run in Chrome or over the network. At the same time, I expected that WkMessageHandlerTransport would be more efficient.

However, I never benchmarked one transport against the other, so I don’t know whether either has a performance benefit. It’d be interesting to see if WkMessageHandlers are more efficient or the other way around.

I think, in theory, one benefit of using the native API is one could avoid serialization of the messages and communicate via JavaScript objects. However, in my transport implementation messages are always serialised to JSON.

Another benefit is not having to run a websockets server, which does bring concerns around security and bloat.
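To illustrate what an abstract transport buys, here’s a minimal sketch. The real `WebviewTransport` interface is not shown in this post, so the trait below (`MessageTransport`), its methods, and the `SetParameter` message shape are all assumptions made for the example. An in-memory loopback built on standard channels stands in for both the WebSocket and the script-message-handler implementations.

```rust
use std::sync::mpsc::{channel, Receiver, Sender};

/// Hypothetical transport interface; the real `WebviewTransport` may differ.
pub trait MessageTransport {
    /// Sends an already-serialised (e.g. JSON) message to the JavaScript side.
    fn send(&self, message: String);
    /// Returns the next message received from JavaScript, if one is waiting.
    fn try_receive(&self) -> Option<String>;
}

/// In-memory stand-in for a WebSocket or message-handler transport.
pub struct LoopbackTransport {
    to_js: Sender<String>,
    from_js: Receiver<String>,
}

/// The "JavaScript" end of the loopback, handy in tests.
pub struct LoopbackPeer {
    pub outgoing: Sender<String>,
    pub incoming: Receiver<String>,
}

pub fn loopback() -> (LoopbackTransport, LoopbackPeer) {
    let (to_js, incoming) = channel();
    let (outgoing, from_js) = channel();
    (
        LoopbackTransport { to_js, from_js },
        LoopbackPeer { outgoing, incoming },
    )
}

impl MessageTransport for LoopbackTransport {
    fn send(&self, message: String) {
        // A disconnected peer is ignored here; a real transport would report it.
        let _ = self.to_js.send(message);
    }

    fn try_receive(&self) -> Option<String> {
        self.from_js.try_recv().ok()
    }
}

/// Application code depends only on the trait, so transports can be swapped.
/// `id` is assumed not to contain characters that need JSON escaping.
pub fn broadcast_parameter(transport: &dyn MessageTransport, id: &str, value: f32) {
    transport.send(format!(
        r#"{{"type":"SetParameter","id":"{}","value":{}}}"#,
        id, value
    ));
}
```

Because `broadcast_parameter` only sees the trait, the same call works whether the front-end runs inside the plug-in’s webview or in a browser over a socket.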

JavaScript side

JavaScript has counterparts to the transport layer which handle the different message transport options.

As mentioned, we may develop the front-end in Google Chrome or, for example, use it as a remote control, but when packaged we may use the native transport.

The tremolo is a super simple front-end written in React.js. It subscribes to and publishes parameter changes, and renders knobs dynamically based on what parameters the Rust side sends to it.

Command handler

When messages are received from JavaScript they’re pushed into a queue. A tokio task pops messages and actually performs the state updates or broadcast.

The command handler keeps a reference to the parameters-store and handles messages to set parameters as well as broadcasting initial parameter state for the GUI to render when it’s created.

When the React.js front-end is mounted on the DOM, it’ll publish a message signaling it’s ready, to which the command handler will respond with definitions of all the knobs it should render.

This might be a bit more complex than it had to be, but it allowed for very loosely coupled components (transport is just something that pushes messages onto a channel, command handler is application specific). Even though I won’t be using the web front-end bits for now, the building blocks could be adapted for other use-cases.
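Here’s a condensed sketch of that command-handling step. To stay self-contained it uses a plain function and a standard-library channel where the real code uses a tokio task, and every name in it (`ClientMessage`, `ServerMessage`, `ParameterStore`, `handle_message`) is hypothetical. Values are stored as `f32` bits inside `AtomicU32`, one common way to let an audio thread read parameters without taking a lock. The reply carries only ids and values, not the full knob definitions.

```rust
use std::collections::HashMap;
use std::sync::atomic::{AtomicU32, Ordering};
use std::sync::mpsc::Sender;
use std::sync::Arc;

/// Messages arriving from the front-end (hypothetical names).
pub enum ClientMessage {
    AppStarted,
    SetParameter { id: String, value: f32 },
}

/// Messages sent back to the front-end (hypothetical names).
#[derive(Debug, PartialEq)]
pub enum ServerMessage {
    PublishParameters(Vec<(String, f32)>),
}

/// Parameter values stored as raw f32 bits so reads and writes are lock-free.
pub struct ParameterStore {
    values: HashMap<String, AtomicU32>,
}

impl ParameterStore {
    pub fn new(initial: &[(&str, f32)]) -> Self {
        let values = initial
            .iter()
            .map(|(id, value)| (id.to_string(), AtomicU32::new(value.to_bits())))
            .collect();
        ParameterStore { values }
    }

    pub fn get(&self, id: &str) -> Option<f32> {
        self.values
            .get(id)
            .map(|bits| f32::from_bits(bits.load(Ordering::Relaxed)))
    }

    /// Returns false when the parameter id is unknown.
    pub fn set(&self, id: &str, value: f32) -> bool {
        match self.values.get(id) {
            Some(bits) => {
                bits.store(value.to_bits(), Ordering::Relaxed);
                true
            }
            None => false,
        }
    }

    /// All parameters, sorted by id so the output is deterministic.
    pub fn snapshot(&self) -> Vec<(String, f32)> {
        let mut all: Vec<(String, f32)> = self
            .values
            .iter()
            .map(|(id, bits)| (id.clone(), f32::from_bits(bits.load(Ordering::Relaxed))))
            .collect();
        all.sort_by(|a, b| a.0.cmp(&b.0));
        all
    }
}

/// Handles one message popped from the queue.
pub fn handle_message(
    store: &Arc<ParameterStore>,
    reply: &Sender<ServerMessage>,
    message: ClientMessage,
) {
    match message {
        // The front-end is ready: tell it what to render.
        ClientMessage::AppStarted => {
            let _ = reply.send(ServerMessage::PublishParameters(store.snapshot()));
        }
        // A knob moved: update the shared state. Unknown ids are ignored.
        ClientMessage::SetParameter { id, value } => {
            store.set(&id, value);
        }
    }
}
```

The handler knows nothing about webviews or sockets: it receives typed messages and pushes replies onto a channel, which is the loose coupling described above.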

Issues & benefits with web front-ends

There are several issues and benefits of web front-ends for VST plug-ins.

Here’s a quick list of some down-sides and comments:

- Much more complexity

  - Runtime & build-time infrastructure to support it

- Worse performance

  - All messages must be serialized and some rendering tasks are less efficient on the web

  - However, there are several rendering tasks which are very efficient on the web, and it’s a very feature-rich platform

- Worse memory usage?

  - Having a completely separate GUI would mean a lot of data is duplicated

  - Compared with an optimised native GUI, memory usage could be worse with a browser, but that’s not really the case when comparing my simple tremolo with iced-based plug-ins

  - The React.js plug-in actually consumes significantly less memory (several times less) than a similar iced counterpart

- Not cross-platform (yet)

  - Since I’m just using raw WkWebView bindings, this is not cross-platform at all (though one could use tauri’s building blocks if they support the plugin use-case at some point - currently, their libraries require that the event-loop is owned by tao)

Overall, the reason I’m not going to continue pushing this side is that I’d really like to do basic audio-visualisation in the plug-ins, and I’ve found that iced has quite good performance at doing that & would be able to integrate into a custom rendering pipeline if I need to.

I think for plug-ins that do not intend to do audio-visualisation, if there was a cross-platform wrapper supporting event callbacks in/out of JavaScript it could be a potential option.

For a professional application, I’d really consider building on top of the web platform a safer bet than adopting Rust native GUI toolkits, as unfortunate as that may be. As long as the project can survive the short-comings of message passing and the extra complexity, it’d be something to consider.

It really depends on the application and its constraints.

iced and druid

I did a very lousy benchmark of iced and druid drawing the input signal as a line.

For this, iced could stay at only around 5% CPU usage to render (not at 60fps), while druid saturated the CPU to do a similar thing.

This may well be due to my incorrect usage of druid.

I’m trying to use the widget API to draw charts (and doing it without much consideration for performance). When profiling, most of the time is spent within CoreGraphics calls, so if drawing back-ends are switchable (I have not checked) this could potentially be worked around.

Even so, the results of this spike, combined with existing support from iced_baseview to render iced apps inside plug-ins led me to pick iced.

iced performance is not 100% free on plugin-host as I had to change some internal lyon calls to get the plugin-host-gui2 audio chart to perform better. I’ve taken notes here: https://github.com/yamadapc/augmented-audio/wiki/Volume-Charts---iced-lyon-performance.

Plugin host iced GUI notes

So far I’ve found iced a very nice library: it’s very easy to use and solves several problems out-of-the-box, with good documentation and working examples.

Some things that I’d put on a wish-list:

NOTE

iced is a great open-source project that I’m sure has been built with care (who knows if not by someone else in lock-down!), and these are things I think need to be solved, but not complaints or demands or anything like that.

- Documentation on how to structure a larger app

- Support for avoiding re-rendering/re-drawing

- Accessibility

- Context menus

- Multiple windows

- Gradients & shadows - I started drafting gradient support here

- High memory usage

Conclusion

Check out the plugin-host-gui2 source code if you’re interested.

I’ve started a discourse topic here as well for discussion.

Reach out to me on the Rust Audio chat if you’d like information, have feedback or just want to try these things out. I go by @yamadapc there.

I’m also on https://twitter.com/yamadapc.

I’ll try to write some thoughts next on the implementation details of plugin-host as well as just keep pushing forward with it.

All the best.