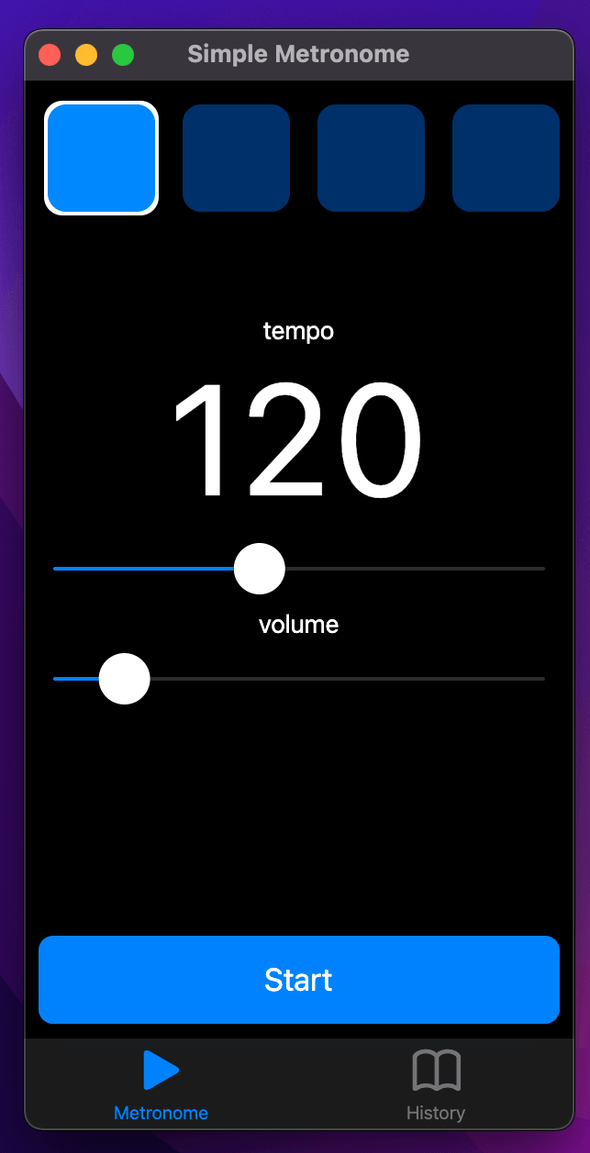

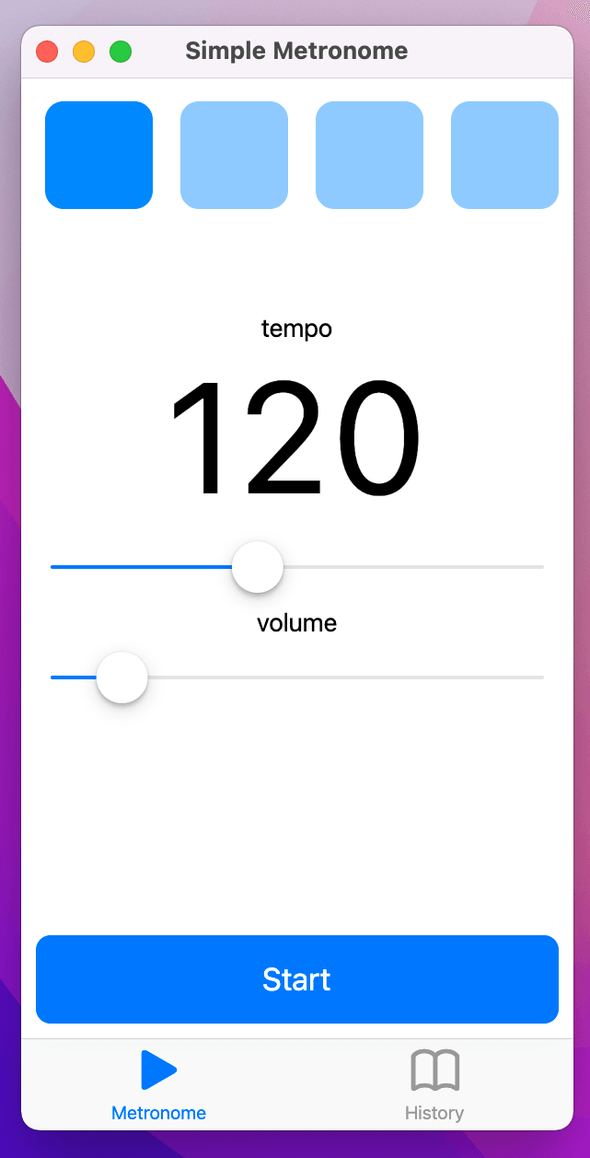

Simple Metronome

Implementing a metronome with Rust and Flutter

In this post, I’ll discuss some work on the Augmented Audio Libraries and, in particular, go over a “metronome” app written using Rust and Flutter.

Goals primer

Before anything else:

- These are hobby projects

- I’m not a professional audio software developer

- Take the information with a grain of salt

- Any feedback is great

Augmented Audio Libraries

While this post is about a metronome, the app is built on top of the Augmented Audio Libraries.

They are a set of building blocks for audio development in Rust, building on top of great tooling from the RustAudio GitHub organization, such as cpal and vst-rs.

The initial driver for them was the Test Plugin Host, a VST host for development with support for hot-reload of in-development plugins. You can read about it here: “Test Plugin Host: An early VST plugin host to aid quick prototyping of audio plugins written in Rust”.

I’ve explored web and native GUIs, which I go over in that post, and I’m now trying out Flutter for audio applications.

It’s still missing a lot of real-world testing and features, but there are quite a few utilities which could deserve posts of their own.

For example:

- Audio Processors
  - audio-processor-traits: Traits for declaring audio processors
  - audio-processor-standalone: Stand-alone audio processor application generator
  - audio-garbage-collector: Background reference counting de-allocation for offloading alloc/de-alloc from the audio thread (wrapper on top of basedrop)
  - audio-processor-utility: Gain, pan, mono/stereo audio processors
  - audio-processor-file: MP3 input file processor and WAV output file processor
  - audio-processor-time: Delay effect audio processor
  - audio-processor-analysis: RMS & FFT analysis processors
  - audio-processor-graph: Audio graphs implementation for audio processor traits

- Synthesizer building blocks: Envelope, Filters & Oscillators

- Data structures: Lock-free queue, MIDI, play-head

Most of the repository is MIT licensed, but it hasn’t been published to crates.io yet, as it’s alpha quality. There are quite a few interesting open questions in terms of how to build GUIs or how best to represent audio processors (and audio buffers) as abstract objects in Rust.

The Metronome

Simple Metronome is the app pictured above and should be published soon. You can find the entire source code in the metronome subdirectory of the Augmented Audio repository.

A metronome is a practice aid for musicians. To practice timing, a musician sets a BPM (beats per minute) on the metronome and starts it. It then produces a sound on every beat, which the musician can use as a reference. In Simple Metronome, this sound is a synthesized beep.
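As a rough illustration of the timing involved, the sketch below derives the length of a beat in frames from the BPM (sample_rate * 60 / bpm) and emits a short beep at the start of each beat. MetronomeSketch, the 440 Hz pitch and the 50 ms beep length are hypothetical choices for this example, not Simple Metronome's actual code.

```rust
use std::f64::consts::PI;

// Hypothetical beep parameters for this sketch.
const BEEP_HZ: f64 = 440.0;
const BEEP_SECONDS: f64 = 0.05;

/// Hypothetical metronome: counts frames and beeps at the start of each beat.
struct MetronomeSketch {
    sample_rate: f64,
    tempo_bpm: f64,
    frames_elapsed: u64, // frames rendered since playback started
}

impl MetronomeSketch {
    fn new(sample_rate: f64, tempo_bpm: f64) -> Self {
        Self { sample_rate, tempo_bpm, frames_elapsed: 0 }
    }

    /// Length of one beat in frames: sample_rate * 60 / bpm.
    fn frames_per_beat(&self) -> f64 {
        self.sample_rate * 60.0 / self.tempo_bpm
    }

    /// Playback position in beats (1.5 = halfway through the second beat).
    fn beat_position(&self) -> f64 {
        self.frames_elapsed as f64 / self.frames_per_beat()
    }

    /// Next mono sample: a sine beep with a linear fade-out during the first
    /// BEEP_SECONDS of every beat, and silence for the rest of the beat.
    fn next_sample(&mut self) -> f32 {
        let frame_in_beat = self.frames_elapsed as f64 % self.frames_per_beat();
        let beep_frames = BEEP_SECONDS * self.sample_rate;
        self.frames_elapsed += 1;
        if frame_in_beat < beep_frames {
            let t = frame_in_beat / self.sample_rate;
            let envelope = 1.0 - frame_in_beat / beep_frames;
            ((2.0 * PI * BEEP_HZ * t).sin() * envelope) as f32
        } else {
            0.0
        }
    }
}

fn main() {
    // 120 BPM at 48 kHz: one beat lasts 24,000 frames (0.5 s).
    let mut metronome = MetronomeSketch::new(48_000.0, 120.0);
    let one_beat: Vec<f32> = (0..24_000).map(|_| metronome.next_sample()).collect();
    let peak = one_beat.iter().fold(0.0f32, |max, s| max.max(s.abs()));
    println!("position: {} beats, peak: {:.2}", metronome.beat_position(), peak);
}
```

Because the position here is derived from a frame counter, changing tempo_bpm mid-playback would make the position jump; accumulating beats incrementally per sample would avoid that.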

The app is written using Flutter and Rust. The GUI and business logic (state/persistence) are written in Dart and drive the Rust audio back-end.

The Rust side exposes methods using flutter_rust_bridge, a high-level codegen solution for Dart/Rust interop. The Rust code is compiled into a static library that exposes functions to Dart, and that library is linked into the macOS and iOS apps (other platforms should work similarly, but haven’t been tested).

Since this is a very simple audio app, only three pieces of data need to be communicated between the GUI and the audio thread:

- tempo parameter (a float)

- current beat (a float)

- volume parameter (a float)

For this, Rust exposes callbacks which set atomic values on the audio processor handle.
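A minimal sketch of such a handle, assuming only the standard library: since std has no atomic float type, each f32 is stored as its bit pattern in an AtomicU32. AtomicF32Sketch, MetronomeHandleSketch and the set_tempo / set_volume / get_position functions are hypothetical stand-ins for what the bridge would expose; the Augmented libraries ship their own AtomicF32.

```rust
use std::sync::atomic::{AtomicU32, Ordering};
use std::sync::Arc;
use std::thread;

/// Lock-free f32 cell: std has no atomic float, so store the bit pattern.
struct AtomicF32Sketch(AtomicU32);

impl AtomicF32Sketch {
    fn new(value: f32) -> Self {
        Self(AtomicU32::new(value.to_bits()))
    }
    fn get(&self) -> f32 {
        f32::from_bits(self.0.load(Ordering::Relaxed))
    }
    fn set(&self, value: f32) {
        self.0.store(value.to_bits(), Ordering::Relaxed);
    }
}

/// Hypothetical shared state for the three metronome values.
struct MetronomeHandleSketch {
    tempo_bpm: AtomicF32Sketch,      // written by the GUI, read by audio
    volume: AtomicF32Sketch,         // written by the GUI, read by audio
    position_beats: AtomicF32Sketch, // written by audio, read by the GUI
}

// Hypothetical functions of the kind the Dart side would call.
fn set_tempo(handle: &MetronomeHandleSketch, bpm: f32) {
    handle.tempo_bpm.set(bpm);
}
fn set_volume(handle: &MetronomeHandleSketch, volume: f32) {
    handle.volume.set(volume);
}
fn get_position(handle: &MetronomeHandleSketch) -> f32 {
    handle.position_beats.get()
}

fn main() {
    let handle = Arc::new(MetronomeHandleSketch {
        tempo_bpm: AtomicF32Sketch::new(120.0),
        volume: AtomicF32Sketch::new(1.0),
        position_beats: AtomicF32Sketch::new(0.0),
    });
    set_tempo(&handle, 90.0);
    set_volume(&handle, 0.5);

    // Stand-in for the audio thread: read the parameters, publish a position.
    let audio_side = Arc::clone(&handle);
    thread::spawn(move || {
        let beats_per_second = audio_side.tempo_bpm.get() / 60.0;
        let _gain = audio_side.volume.get();
        audio_side.position_beats.set(beats_per_second * 2.0); // after 2 s of playback
    })
    .join()
    .unwrap();

    println!("position: {} beats", get_position(&handle));
}
```

Relaxed ordering is enough in this sketch because each value is independent: no other memory is published through these stores, so neither thread relies on seeing writes in a particular order.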

Playing back a Sine Wave with Augmented

Let me show what a sine wave generator looks like using Augmented.

First, we declare our audio processor struct.

```rust
use augmented_oscillator::Oscillator;

#[derive(Default)]
struct OscillatorProcessor {
    oscillator: Oscillator<f32>,
}
```

Then, implement the AudioProcessor trait:

```rust
use audio_processor_traits::{AudioProcessor, AudioBuffer, AudioProcessorSettings};

impl AudioProcessor for OscillatorProcessor {
    type SampleType = f32;

    // Receives the audio settings (e.g. sample rate) before processing starts.
    fn prepare(&mut self, settings: AudioProcessorSettings) {
        self.oscillator.set_sample_rate(settings.sample_rate());
    }

    // Receives a mutable buffer that is generic over its sample layout.
    fn process<BufferType: AudioBuffer<SampleType = Self::SampleType>>(
        &mut self,
        buffer: &mut BufferType,
    ) {
        for frame in buffer.frames_mut() {
            // Tick the oscillator once per frame...
            let out = self.oscillator.next_sample();
            // ...and write the same value to every channel in the frame.
            for sample in frame {
                *sample = out;
            }
        }
    }
}
```

The AudioProcessor trait requires two methods:

- prepare: receives the audio settings, such as sample rate, so the processor can set up internal state

- process: receives a mutable, generic audio buffer implementation, which allows processing decoupled from sample layout

The second point is up for discussion. I’ve found that some audio buffer indirection may carry a serious performance cost due to missed optimisations, and it might not be worth having it instead of plain sample slices.

However, some kind of ‘process callback context’ is required, as the processor needs to know the number of input/output channels.
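To make the trade-off concrete, here is a sketch of the plain-slice alternative: a processor that works on an interleaved &mut [f32] with no buffer trait, where the only layout information it needs is the channel count, carried by a hypothetical ProcessContext struct. This illustrates the idea; it is not the audio_processor_traits API.

```rust
/// Hypothetical per-callback context. With a plain interleaved slice, the
/// channel count is the one piece of layout information the processor needs.
struct ProcessContext {
    num_channels: usize,
}

/// Per-channel gain over an interleaved slice ([L0, R0, L1, R1, ...] for stereo).
/// Assumes samples.len() is a multiple of num_channels; chunks_exact_mut
/// skips any trailing partial frame.
fn apply_channel_gains(context: &ProcessContext, samples: &mut [f32], gains: &[f32]) {
    assert_eq!(gains.len(), context.num_channels, "one gain per channel");
    for frame in samples.chunks_exact_mut(context.num_channels) {
        for (sample, gain) in frame.iter_mut().zip(gains) {
            *sample *= *gain;
        }
    }
}

fn main() {
    let context = ProcessContext { num_channels: 2 };
    let mut samples: [f32; 4] = [1.0, 1.0, 0.5, 0.5];
    // Keep the left channel and silence the right one.
    apply_channel_gains(&context, &mut samples, &[1.0, 0.0]);
    println!("{:?}", samples);
}
```

The inner loops operate directly on contiguous slices, which is the kind of code the compiler can optimise well; the cost is that every processor has to handle the interleaving convention itself.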

AudioBuffer::frames_mut returns an iterator over frames, where each frame holds one sample per channel (a sample pair for stereo audio). We simply tick the oscillator once per frame and write its output to every channel.
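For a self-contained view of what ticking an oscillator involves, the sketch below pairs a phase-accumulator sine oscillator with the same per-frame loop, using chunks_exact_mut over an interleaved buffer as a stand-in for frames_mut. SineSketch and fill_frames are hypothetical; augmented_oscillator's internals may differ.

```rust
use std::f32::consts::TAU;

/// Hypothetical phase-accumulator sine oscillator.
struct SineSketch {
    phase: f32, // position within the current cycle, in [0, 1)
    frequency: f32,
    sample_rate: f32,
}

impl SineSketch {
    fn new(frequency: f32, sample_rate: f32) -> Self {
        Self { phase: 0.0, frequency, sample_rate }
    }

    /// Returns the sample at the current phase, then advances by one sample period.
    fn next_sample(&mut self) -> f32 {
        let out = (self.phase * TAU).sin();
        self.phase = (self.phase + self.frequency / self.sample_rate).fract();
        out
    }
}

/// Ticks the oscillator once per frame and writes the value to every channel.
fn fill_frames(oscillator: &mut SineSketch, buffer: &mut [f32], num_channels: usize) {
    for frame in buffer.chunks_exact_mut(num_channels) {
        let out = oscillator.next_sample();
        for sample in frame.iter_mut() {
            *sample = out;
        }
    }
}

fn main() {
    let mut oscillator = SineSketch::new(440.0, 48_000.0);
    let mut buffer = vec![0.0f32; 512 * 2]; // 512 stereo frames, interleaved
    fill_frames(&mut oscillator, &mut buffer, 2);
    println!("first frames: {:?}", &buffer[..6]);
}
```

Keeping the phase in [0, 1) with fract() stops it from growing without bound, which would otherwise erode f32 precision over long playback.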

Main function

Once this is set up, a command-line app can be generated with audio_processor_standalone.

```rust
fn main() {
    let processor = OscillatorProcessor::default();
    audio_processor_standalone::audio_processor_main(processor);
}
```

The command-line app supports both “online” (using your input/output devices) and “offline” (into a file) rendering.

```
audio-processor-standalone

USAGE:
    my-crate [OPTIONS]

FLAGS:
    -h, --help       Prints help information
    -V, --version    Prints version information

OPTIONS:
    -i, --input-file <INPUT_PATH>              An input audio file to process
        --midi-input-file <MIDI_INPUT_FILE>    If specified, this MIDI file will be passed through the processor
    -o, --output-file <OUTPUT_PATH>            If specified, will render offline into this file (WAV)
```

It can also read an input audio file and feed it into the processor, as well as read an input MIDI file.
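Conceptually, offline rendering replaces the device callback with a loop that calls the processor on fixed-size blocks until the requested duration has been rendered. The sketch below assumes a hypothetical BlockProcessor trait and a stand-in Constant processor, and it leaves out WAV encoding; it is not taken from audio_processor_standalone.

```rust
/// Hypothetical minimal processor interface for this sketch.
trait BlockProcessor {
    fn process(&mut self, interleaved: &mut [f32]);
}

/// Stand-in processor that writes a constant value to every sample.
struct Constant(f32);

impl BlockProcessor for Constant {
    fn process(&mut self, interleaved: &mut [f32]) {
        interleaved.fill(self.0);
    }
}

/// Renders `seconds` of audio by calling the processor on blocks of at most
/// `block_size` frames and appending each block to the output.
fn render_offline<P: BlockProcessor>(
    processor: &mut P,
    sample_rate: u32,
    num_channels: usize,
    seconds: f64,
    block_size: usize,
) -> Vec<f32> {
    let total_frames = (seconds * f64::from(sample_rate)).round() as usize;
    let mut output = Vec::with_capacity(total_frames * num_channels);
    let mut block = vec![0.0f32; block_size * num_channels];
    let mut frames_left = total_frames;
    while frames_left > 0 {
        // The last block may be shorter than block_size.
        let frames = frames_left.min(block_size);
        let chunk = &mut block[..frames * num_channels];
        chunk.fill(0.0); // start each block from silence, as with no input file
        processor.process(chunk);
        output.extend_from_slice(chunk);
        frames_left -= frames;
    }
    output
}

fn main() {
    let mut processor = Constant(0.25);
    let rendered = render_offline(&mut processor, 48_000, 2, 0.5, 512);
    // The samples would then be encoded as WAV; that step is omitted here.
    println!("rendered {} interleaved samples", rendered.len());
}
```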

To process MIDI, audio_processor_main_with_midi should be used, and the struct needs to implement the MidiEventHandler trait.

Embedding into an app

Besides generating a command-line app, audio_processor_standalone can also be used to embed an audio thread into another app. For this, the standalone_start function is used. The library returns a set of “handles”: objects that should be held onto, since the audio thread stops when they are dropped.
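The “stop when dropped” behaviour is ordinary Rust RAII. Here is a minimal sketch with std threads, where AudioThreadHandleSketch and start_sketch are hypothetical names rather than the library's types: Drop clears a running flag and joins the worker, so the thread has exited by the time the handle is gone. A real host would be driven by the audio device callback instead of a sleep loop.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;
use std::thread::{self, JoinHandle};
use std::time::Duration;

/// Hypothetical stand-in for an audio-thread handle: the worker runs until
/// this value is dropped.
struct AudioThreadHandleSketch {
    running: Arc<AtomicBool>,
    worker: Option<JoinHandle<()>>,
}

fn start_sketch() -> AudioThreadHandleSketch {
    let running = Arc::new(AtomicBool::new(true));
    let flag = Arc::clone(&running);
    let worker = thread::spawn(move || {
        while flag.load(Ordering::Acquire) {
            // A real host is driven by the device callback; this loop only simulates work.
            thread::sleep(Duration::from_millis(1));
        }
    });
    AudioThreadHandleSketch { running, worker: Some(worker) }
}

impl Drop for AudioThreadHandleSketch {
    fn drop(&mut self) {
        // Ask the worker to stop, then wait for it to exit.
        self.running.store(false, Ordering::Release);
        if let Some(worker) = self.worker.take() {
            let _ = worker.join();
        }
    }
}

fn main() {
    let handle = start_sketch();
    thread::sleep(Duration::from_millis(10));
    drop(handle); // returns only after the worker thread has exited
    println!("audio thread stopped");
}
```

This is why the handles must be stored somewhere long-lived (for example, in the app's state): letting them fall out of scope at the end of a setup function would silently stop the audio.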

Audio Processor Handle Pattern

I found a little pattern for sharing state between GUI and audio thread, which is the “audio processor handle” pattern.

Each AudioProcessor declares its shared state as a handle, which is thread-safe (it uses atomics or other real-time-safe synchronization primitives). The handle lives behind a shared pointer that is reference counted and de-allocated on a background thread.

The GUI thread will clone the handle and keep a reference to it, while the audio processor is moved into the audio thread.

```rust
use audio_processor_traits::AtomicF32;
use audio_garbage_collector::{Shared, make_shared};

struct MyProcessor {
    handle: Shared<MyProcessorHandle>,
}

// State shared between the GUI and audio threads; every field must be thread-safe.
struct MyProcessorHandle {
    volume: AtomicF32,
}

fn example() {
    let processor = MyProcessor {
        handle: make_shared(MyProcessorHandle {
            volume: AtomicF32::new(0.0),
        }),
    };

    // Cloning the reference-counted pointer; both copies point at the same handle.
    let handle = processor.handle.clone();

    // now the processor can be moved to the audio thread.
    // the handle can be shared between many threads & must be thread safe
}
```

Conclusion

Simple Metronome will soon be available for free on the macOS and iOS App Stores.

Its source code and building blocks will continue to be available, and I intend to write more simple and complex apps in order to stress-test them.

Please reach out with any feedback, questions or comments on Twitter - @yamadapc.

All the best.