Augmented Audio - Generic AudioProcessors in Rust

Utilities for audio programming, generating GUIs, VSTs and CLIs

This is a February update on Augmented Audio Libraries, my hobby libraries for audio programming in Rust.

Note: Most of this is unpublished to crates.io.

This is the 3rd post in a series:

- Test Plugin Host: An early VST host to aid prototyping of plugins

- Simple Metronome: Implementing a metronome with Rust and Flutter

Overview

In this post I’ll try to write about some ideas around generating CLIs and GUIs.

I’ll share the AudioProcessor and AudioProcessorHandle traits, and some usage of audio_processor_standalone in conjunction with them.

AudioProcessor: Implementing a BitCrusher

We’d like to implement a bit-crusher, a sample-rate reduction distortion effect.

I’m using an algorithm I found on the internet, which works by applying sample-and-hold to the input signal.

The algorithm is:

```rust
// src/lib.rs

fn process(
    // Given sample_rate as samples per second
    sample_rate: f32,
    // Given bit_rate as output samples per second
    bit_rate: f32,
    // Given buffer as the mono input/output buffer of samples
    buffer: &mut [f32],
) {
    let buffer_size = buffer.len();
    // We'll take a new sample every `sample_rate / bit_rate` indexes
    // (at least 1, so the loop always advances)
    let step_size = ((sample_rate / bit_rate) as usize).max(1);
    let mut sample_index = 0;

    // For each index in the buffer
    while sample_index < buffer_size {
        // Take the first sample
        let first_sample = buffer[sample_index];
        let limit_index = (sample_index + step_size).min(buffer_size);

        // Hold it for `sample_rate / bit_rate` steps
        while sample_index < limit_index {
            buffer[sample_index] = first_sample;
            sample_index += 1;
        }
    }
}
```

Cool. We have our processor, which is super simple, but it has a few minor issues:

- It only supports mono buffers

- Multichannel support would require non-interleaved buffers (in an interleaved buffer, each multichannel frame follows the previous one: [l1, r1, l2, r2, l3, r3])

- We can’t hear it

- We can’t play with the parameters on a GUI

- We can’t load it in a DAW and play with it

We’ll address each of these.
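To see concretely why the mono version doesn’t carry over to interleaved audio, here’s a small standalone sketch (my own illustration, not library code) that runs the same sample-and-hold over a stereo interleaved buffer. It swaps the manual index loop for slice::chunks_mut, which is equivalent for a mono buffer, and takes step_size directly in place of sample_rate / bit_rate.

```rust
/// Mono sample-and-hold, equivalent to the `process` function above but with
/// `step_size` (= sample_rate / bit_rate) passed in directly.
fn sample_and_hold_mono(buffer: &mut [f32], step_size: usize) {
    // chunks_mut panics on 0, so hold for at least one sample
    for chunk in buffer.chunks_mut(step_size.max(1)) {
        // Hold the first sample of each chunk over the whole chunk
        let first_sample = chunk[0];
        chunk.fill(first_sample);
    }
}
```

Running it over an interleaved [l1, r1, l2, r2] with a step of 2 yields [l1, l1, l2, l2]: the right channel gets overwritten with left-channel samples, so the effect has to know about channels.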

Implementing AudioProcessor - Generic buffers

The AudioProcessor trait aims to provide a way to implement audio processors against a general interface.

There are two methods to implement:

- prepare - Here we’ll receive audio settings like the sample rate and prepare our processor for playback
- process - Here we’ll receive an audio buffer and process it

First let’s make this into a struct and declare some imports we’ll need:

```rust
// src/lib.rs

use audio_processor_traits::{AudioBuffer, AudioProcessor, AudioProcessorSettings};

pub struct BitCrusherProcessor {
    sample_rate: f32,
    bit_rate: f32,
}

impl Default for BitCrusherProcessor {
    fn default() -> Self {
        Self { sample_rate: 44100.0, bit_rate: 11025.0 }
    }
}
```

We’ll store parameters in the struct so they can be changed externally (this will change slightly later). We also need imports for AudioProcessor, AudioProcessorSettings and AudioBuffer.

We can now declare the AudioProcessor impl:

```rust
impl AudioProcessor for BitCrusherProcessor {
    type SampleType = f32;

    fn prepare(&mut self, settings: AudioProcessorSettings) {
        self.sample_rate = settings.sample_rate();
    }

    fn process<BufferType: AudioBuffer<SampleType = Self::SampleType>>(
        &mut self,
        data: &mut BufferType,
    ) {
        let buffer_size = data.num_samples();
        let step_size = ((self.sample_rate / self.bit_rate) as usize).max(1);
        let mut sample_index = 0;

        while sample_index < buffer_size {
            let first_index = sample_index;
            let limit_index = (sample_index + step_size).min(buffer_size);

            while sample_index < limit_index {
                // **************************************************
                // HERE - This is the only real change
                // **************************************************
                for channel_index in 0..data.num_channels() {
                    let out = *data.get(channel_index, first_index);
                    data.set(channel_index, sample_index, out);
                }
                // **************************************************

                sample_index += 1;
            }
        }
    }
}
```

In our prepare method we use the provided sample_rate, which will match whatever output device we use this processor with.

In process we receive an AudioBuffer. AudioBuffer is an abstract multichannel buffer, so the concrete buffer may be one of several types.

The audio-processor-traits crate provides implementations for:

- Interleaved samples
- Non-interleaved samples
- Helpers for VST interop

Additionally, SampleType = f32 is declared as part of the processor specification. If we wanted, we could declare both f32 and f64 processors with generics.

AudioBuffer provides a handful of methods. Here we use get, set, num_samples and num_channels, whose names should make clear what they do.

I’ve found Rust can optimise iterator-based code better, so AudioBuffer also provides frames and frames_mut, which iterate over the multichannel frames and work with different layouts at zero cost in the cases that benefit from them. They are the preferred way of processing, but in this case get / set are simpler, as we want to index into the buffer.
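As a rough illustration of the frame-at-a-time style, here’s a sketch over a plain interleaved &mut [f32] using only standard slice methods (chunks_mut, split_at_mut, chunks_exact_mut). It does not use AudioBuffer::frames_mut, whose exact signature I’m not reproducing here, and sample_and_hold_frames is a hypothetical helper; it only shows how sample-and-hold maps onto whole frames without per-sample index arithmetic.

```rust
/// Hypothetical helper: sample-and-hold over an interleaved buffer, one frame
/// (`num_channels` samples) at a time.
fn sample_and_hold_frames(data: &mut [f32], num_channels: usize, step_size: usize) {
    assert!(num_channels > 0, "need at least one channel");
    assert_eq!(data.len() % num_channels, 0, "buffer must hold whole frames");
    let step_size = step_size.max(1);

    // Each region of `step_size` frames is held at the value of its first frame.
    for region in data.chunks_mut(step_size * num_channels) {
        // Regions are a non-empty multiple of num_channels, so this split is in bounds.
        let (first_frame, rest) = region.split_at_mut(num_channels);
        for frame in rest.chunks_exact_mut(num_channels) {
            frame.copy_from_slice(first_frame);
        }
    }
}
```

All channels of a frame are held together, and nothing is allocated while processing.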

The processing code has only changed in the inner for loop: we now do the sample-and-hold for each channel in the buffer.
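To make the “written once, works for several layouts” point concrete without depending on audio-processor-traits, here’s a self-contained sketch. SimpleBuffer, Interleaved and NonInterleaved are hypothetical stand-ins that only mirror the four methods used above; the real AudioBuffer::get appears to return a reference (hence the * in the impl), while this one returns the value. The generic sample_and_hold is the same loop as the process method.

```rust
/// Hypothetical, cut-down stand-in for an AudioBuffer-style trait.
/// This is NOT the audio-processor-traits API.
trait SimpleBuffer {
    fn num_channels(&self) -> usize;
    fn num_samples(&self) -> usize;
    fn get(&self, channel: usize, sample: usize) -> f32;
    fn set(&mut self, channel: usize, sample: usize, value: f32);
}

/// Interleaved layout: [l1, r1, l2, r2, ...]
struct Interleaved {
    channels: usize,
    data: Vec<f32>,
}

impl SimpleBuffer for Interleaved {
    fn num_channels(&self) -> usize { self.channels }
    fn num_samples(&self) -> usize { self.data.len() / self.channels }
    fn get(&self, channel: usize, sample: usize) -> f32 {
        self.data[sample * self.channels + channel]
    }
    fn set(&mut self, channel: usize, sample: usize, value: f32) {
        self.data[sample * self.channels + channel] = value;
    }
}

/// Non-interleaved layout: one Vec per channel.
struct NonInterleaved {
    channels: Vec<Vec<f32>>,
}

impl SimpleBuffer for NonInterleaved {
    fn num_channels(&self) -> usize { self.channels.len() }
    fn num_samples(&self) -> usize { self.channels.first().map_or(0, |c| c.len()) }
    fn get(&self, channel: usize, sample: usize) -> f32 { self.channels[channel][sample] }
    fn set(&mut self, channel: usize, sample: usize, value: f32) {
        self.channels[channel][sample] = value;
    }
}

/// The multichannel sample-and-hold, written once against the trait.
fn sample_and_hold<B: SimpleBuffer>(data: &mut B, step_size: usize) {
    let step_size = step_size.max(1);
    let buffer_size = data.num_samples();
    let mut sample_index = 0;
    while sample_index < buffer_size {
        let first_index = sample_index;
        let limit_index = (sample_index + step_size).min(buffer_size);
        while sample_index < limit_index {
            // Copy the held frame into every channel of the current frame
            for channel in 0..data.num_channels() {
                let out = data.get(channel, first_index);
                data.set(channel, sample_index, out);
            }
            sample_index += 1;
        }
    }
}
```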

Listening to our AudioProcessor - Generic CLI

At this point, we can listen to our processor. We’ll create an examples directory and add audio_processor_standalone as a dependency:

⚠️ Don’t run this without reading on ⚠️

```rust
// examples/bitcrusher.rs

use bitcrusher::BitCrusherProcessor;

fn main() {
    let processor = BitCrusherProcessor::default();
    audio_processor_standalone::audio_processor_main(processor);
}
```

audio_processor_standalone::audio_processor_main runs a little CLI program with our processor.

If I run cargo run --example bitcrusher -- --help, I get the following:

```
audio-processor-standalone

USAGE:
    bitcrusher [OPTIONS]

FLAGS:
    -h, --help       Prints help information
    -V, --version    Prints version information

OPTIONS:
    -i, --input-file <INPUT_PATH>      An input audio file to process
    -o, --output-file <OUTPUT_PATH>    If specified, will render offline into this file (WAV)
```

If I run it without options, it’ll run “online” processing on the system’s default audio input/output (this may cause feedback issues).

We also get --input-file and --output-file, which we can use to process audio files offline.

These are two independent ways of running the processor:

- CPAL online rendering
  - We can run the processor on the default input/output & plug in a guitar if we want
- CLI offline rendering
  - We can generate output from input files and run analysis or tests over it
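Conceptually, offline rendering means calling prepare once with the file’s sample rate, then pushing the audio through process in fixed-size blocks. Here’s a self-contained sketch of that loop; the Processor trait, SampleAndHold and render_offline are hypothetical simplifications (mono, no file I/O), not audio_processor_standalone’s actual code.

```rust
/// Hypothetical minimal processor interface mirroring prepare / process (mono).
trait Processor {
    fn prepare(&mut self, sample_rate: f32);
    fn process(&mut self, block: &mut [f32]);
}

/// Mono sample-and-hold, as in the bitcrusher above.
struct SampleAndHold {
    sample_rate: f32,
    bit_rate: f32,
}

impl Processor for SampleAndHold {
    fn prepare(&mut self, sample_rate: f32) {
        self.sample_rate = sample_rate;
    }

    fn process(&mut self, block: &mut [f32]) {
        let step_size = ((self.sample_rate / self.bit_rate) as usize).max(1);
        for chunk in block.chunks_mut(step_size) {
            let first_sample = chunk[0];
            chunk.fill(first_sample);
        }
    }
}

/// Offline rendering: prepare once, then feed the whole input through
/// `process` in blocks of `block_size` samples, collecting the output.
fn render_offline<P: Processor>(
    processor: &mut P,
    sample_rate: f32,
    input: &[f32],
    block_size: usize,
) -> Vec<f32> {
    let mut output = input.to_vec();
    processor.prepare(sample_rate);
    for block in output.chunks_mut(block_size.max(1)) {
        processor.process(block);
    }
    output
}
```

Because the output is deterministic, it can be compared against expected values in tests. Note that, like the process method above, the hold restarts at every block boundary, so the result depends on the block size unless it’s a multiple of the step.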

Listening to our AudioProcessor - Generic VST

If you want to plug this into a DAW right now, you can.

Add another examples/bitcrusher_vst.rs:

```rust
// examples/bitcrusher_vst.rs

use bitcrusher::BitCrusherProcessor;

audio_processor_standalone::standalone_vst!(BitCrusherProcessor);
```

In Cargo.toml, the bitcrusher_vst example should be configured to compile as a dynamic library:

```toml
[[example]]
name = "bitcrusher_vst"
crate-type = ["cdylib"]
```

In the augmented-audio repository (this tooling isn’t installable otherwise), we can generate a proper VST bundle for macOS (the Info.plist and .vst directory structure) by marking this crate as having a VST example:

```toml
[package.metadata.augmented]
vst_examples = ["bitcrusher_vst"]
private = true
```

And then running dev.sh:

```shell
./scripts/dev.sh build --crate ./path-to-bitcrusher
```

This will output a VST under target.

Generic GUI

Now we want a GUI for the processor, so we can twist a knob and change our parameter. In order to do this, we’ll need to introduce an “audio processor handle”.

The audio processor handle is a pattern I’ve introduced, built on the following ideas:

- Our audio processor struct is a mutable, stateful object owned by the audio-thread

- If we want a GUI thread, the GUI thread will hold a “handle” for the processor

- The handle will be a shared, reference-counted struct that uses atomics for thread-safety

Extract parameters into a handle

So first we’ll extract the parameters from the processor into a handle:

```rust
// src/lib.rs

use audio_garbage_collector::{make_shared, Shared};
use audio_processor_traits::{AtomicF32, AudioBuffer, AudioProcessor, AudioProcessorSettings};

pub struct BitCrusherHandle {
    sample_rate: AtomicF32,
    bit_rate: AtomicF32,
}

impl Default for BitCrusherHandle {
    fn default() -> Self {
        Self {
            sample_rate: AtomicF32::from(44100.0),
            bit_rate: AtomicF32::from(11025.0),
        }
    }
}

pub struct BitCrusherProcessor {
    handle: Shared<BitCrusherHandle>,
}

impl Default for BitCrusherProcessor {
    fn default() -> Self {
        Self { handle: make_shared(BitCrusherHandle::default()) }
    }
}
```

There are 3 parts I want to clarify in this snippet:

audio_processor_traits::AtomicF32

AtomicF32 and AtomicF64 are conveniently exported from audio_processor_traits. On release builds these compile to the same code as using normal floats; they’re an unfortunate necessity to make the Rust compiler happy about changing floats from multiple threads.
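For intuition on how an atomic float can work, here’s a sketch that stores the f32’s bit pattern in a std AtomicU32. This is my own illustration of the technique, not necessarily how audio_processor_traits implements AtomicF32: the From&lt;f32&gt; conversion matches the AtomicF32::from calls above, while get and set are names I chose for the sketch. Relaxed ordering is enough for an independent parameter value: each read sees a complete value that was written, but no ordering with other memory is implied.

```rust
use std::sync::atomic::{AtomicU32, Ordering};

/// Sketch of an atomic f32: the float's bit pattern lives in an AtomicU32.
pub struct AtomicF32 {
    bits: AtomicU32,
}

impl AtomicF32 {
    pub fn new(value: f32) -> Self {
        Self { bits: AtomicU32::new(value.to_bits()) }
    }

    /// Relaxed: we need a whole, untorn value, not ordering with other memory.
    pub fn get(&self) -> f32 {
        f32::from_bits(self.bits.load(Ordering::Relaxed))
    }

    pub fn set(&self, value: f32) {
        self.bits.store(value.to_bits(), Ordering::Relaxed);
    }
}

impl From<f32> for AtomicF32 {
    fn from(value: f32) -> Self {
        Self::new(value)
    }
}
```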

Shared

Shared is re-exported from basedrop.

basedrop provides a couple of smart pointers that will not de-allocate on the current thread. The idea here is that if we drop BitCrusherProcessor on the audio-thread (in the case of a dynamic processor graph), we don’t want any de-allocations to happen on the audio-thread.

basedrop will instead push the de-allocation onto a queue.

make_shared

make_shared will create a Shared smart pointer using the default global GC thread: audio_garbage_collector starts a global GC thread, and this Shared is associated with it. With raw basedrop we’d have to set this up manually in different places.
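To illustrate the deferred-deallocation idea (and a lazily started global collector like the one make_shared uses), here’s a deliberately simplified sketch using only std. Deferred and gc_sender are hypothetical names, not basedrop’s or audio_garbage_collector’s API. Caveat: it uses an mpsc channel behind a Mutex for brevity, which can lock and allocate, so unlike basedrop’s queue it is not actually real-time safe; it only shows where the free happens.

```rust
use std::any::Any;
use std::ops::Deref;
use std::sync::mpsc::{channel, Sender};
use std::sync::{Mutex, OnceLock};
use std::thread;

/// Anything that can be sent to the collector thread and dropped there.
type Garbage = Box<dyn Any + Send>;

/// Returns a sender to a global collector thread, starting it on first use.
fn gc_sender() -> Sender<Garbage> {
    static SENDER: OnceLock<Mutex<Sender<Garbage>>> = OnceLock::new();
    SENDER
        .get_or_init(|| {
            let (tx, rx) = channel::<Garbage>();
            // The collector thread: drop whatever arrives, for the life of the process.
            thread::spawn(move || {
                for garbage in rx {
                    drop(garbage);
                }
            });
            Mutex::new(tx)
        })
        .lock()
        .unwrap()
        .clone()
}

/// A box whose contents are freed on the collector thread, not where it's dropped.
pub struct Deferred<T: Send + 'static>(Option<Box<T>>);

impl<T: Send + 'static> Deferred<T> {
    pub fn new(value: T) -> Self {
        Deferred(Some(Box::new(value)))
    }
}

impl<T: Send + 'static> Deref for Deferred<T> {
    type Target = T;
    fn deref(&self) -> &T {
        // Only None once drop() has run, so this cannot fail in normal use.
        self.0.as_deref().expect("value present until dropped")
    }
}

impl<T: Send + 'static> Drop for Deferred<T> {
    fn drop(&mut self) {
        if let Some(boxed) = self.0.take() {
            let garbage: Garbage = boxed;
            // If the collector is gone, send() hands the value back and it drops here.
            let _ = gc_sender().send(garbage);
        }
    }
}
```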

Implement a generic handle

I won’t go over the changes required to the process / prepare methods, as these changes are mechanical.
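For reference, here’s one way those mechanical changes could look, as a self-contained sketch: std::sync::Arc stands in for Shared, AtomicU32 bit patterns stand in for AtomicF32, and the buffer is mono rather than an AudioBuffer. The accessor names sample_rate(), bit_rate() and set_bit_rate() are chosen to match the calls in the generic handle that follows; set_sample_rate() and new() are my own additions.

```rust
use std::sync::atomic::{AtomicU32, Ordering};
use std::sync::Arc;

/// Parameters shared between the audio-thread and a GUI thread.
/// Each f32 is stored as its bit pattern in an AtomicU32.
pub struct BitCrusherHandle {
    sample_rate: AtomicU32,
    bit_rate: AtomicU32,
}

impl Default for BitCrusherHandle {
    fn default() -> Self {
        Self {
            sample_rate: AtomicU32::new(44100.0_f32.to_bits()),
            bit_rate: AtomicU32::new(11025.0_f32.to_bits()),
        }
    }
}

impl BitCrusherHandle {
    pub fn sample_rate(&self) -> f32 {
        f32::from_bits(self.sample_rate.load(Ordering::Relaxed))
    }
    pub fn set_sample_rate(&self, value: f32) {
        self.sample_rate.store(value.to_bits(), Ordering::Relaxed);
    }
    pub fn bit_rate(&self) -> f32 {
        f32::from_bits(self.bit_rate.load(Ordering::Relaxed))
    }
    pub fn set_bit_rate(&self, value: f32) {
        self.bit_rate.store(value.to_bits(), Ordering::Relaxed);
    }
}

pub struct BitCrusherProcessor {
    handle: Arc<BitCrusherHandle>,
}

impl BitCrusherProcessor {
    pub fn new(handle: Arc<BitCrusherHandle>) -> Self {
        Self { handle }
    }

    /// prepare now writes the device sample rate into the handle
    pub fn prepare(&mut self, sample_rate: f32) {
        self.handle.set_sample_rate(sample_rate);
    }

    /// process reads both parameters once per buffer
    pub fn process(&mut self, buffer: &mut [f32]) {
        let step_size = ((self.handle.sample_rate() / self.handle.bit_rate()) as usize).max(1);
        for chunk in buffer.chunks_mut(step_size) {
            let first_sample = chunk[0];
            chunk.fill(first_sample);
        }
    }
}
```

Since parameters are read from the handle on every process call, a GUI thread holding another clone of the Arc can change the bit rate at any time.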

To generate a GUI, we need a way to introspect the available parameters at runtime.

This is done by implementing AudioProcessorHandle, and the way to do that is to create a newtype around our smart pointer:

```rust
// src/generic_handle.rs

use audio_garbage_collector::Shared;
use audio_processor_traits::parameters::{
    AudioProcessorHandle, FloatType, ParameterSpec, ParameterType, ParameterValue,
};

use super::BitCrusherHandle;

pub struct BitCrusherHandleRef(Shared<BitCrusherHandle>);

impl BitCrusherHandleRef {
    pub fn new(inner: Shared<BitCrusherHandle>) -> Self {
        BitCrusherHandleRef(inner)
    }
}

// sample_rate(), bit_rate() and set_bit_rate() are accessors on
// BitCrusherHandle that load / store its atomics (not shown above)
impl AudioProcessorHandle for BitCrusherHandleRef {
    // A single parameter: the bit rate
    fn parameter_count(&self) -> usize {
        1
    }

    fn get_parameter_spec(&self, _index: usize) -> ParameterSpec {
        ParameterSpec::new(
            "Bit rate".into(),
            ParameterType::Float(FloatType {
                // From 100 up to the current sample rate
                range: (100.0, self.0.sample_rate()),
                step: None,
            }),
        )
    }

    fn get_parameter(&self, _index: usize) -> Option<ParameterValue> {
        Some(ParameterValue::Float {
            value: self.0.bit_rate(),
        })
    }

    fn set_parameter(&self, _index: usize, request: ParameterValue) {
        // Ignore values of any other type
        if let ParameterValue::Float { value } = request {
            self.0.set_bit_rate(value);
        }
    }
}
```

This is some basic boilerplate that could be generated at some point.
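To show what a generic GUI can do with such a handle, here’s a sketch of the consuming side. ParameterIntrospection and FloatSpec are hypothetical simplifications of AudioProcessorHandle / ParameterSpec (float parameters only, plain f32 values), and describe / set_from_knob are illustrative helpers, not audio_processor_standalone’s GUI code. It lists every parameter and maps a 0..1 knob position into the parameter’s range.

```rust
/// Hypothetical, simplified parameter description (float parameters only).
#[derive(Debug, Clone)]
pub struct FloatSpec {
    pub name: String,
    pub range: (f32, f32),
}

/// Hypothetical mirror of the handle's introspection methods.
pub trait ParameterIntrospection {
    fn parameter_count(&self) -> usize;
    fn get_parameter_spec(&self, index: usize) -> FloatSpec;
    fn get_parameter(&self, index: usize) -> Option<f32>;
    fn set_parameter(&self, index: usize, value: f32);
}

/// What a generic GUI might render: one row per parameter.
pub fn describe(handle: &dyn ParameterIntrospection) -> Vec<String> {
    (0..handle.parameter_count())
        .map(|index| {
            let spec = handle.get_parameter_spec(index);
            let value = handle.get_parameter(index).unwrap_or(spec.range.0);
            format!("{}: {} [{}..{}]", spec.name, value, spec.range.0, spec.range.1)
        })
        .collect()
}

/// Map a knob position in 0..=1 into the parameter's range and set it.
pub fn set_from_knob(handle: &dyn ParameterIntrospection, index: usize, knob: f32) {
    let (min, max) = handle.get_parameter_spec(index).range;
    handle.set_parameter(index, min + knob.clamp(0.0, 1.0) * (max - min));
}
```

Nothing here knows about bit-crushers; everything comes from the introspection methods, which is what lets one GUI serve any processor.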

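Once we have this, by convention, we’ll declare a generic_handle method on our processor:

```rust
// src/lib.rs

mod generic_handle;

use audio_processor_traits::parameters::AudioProcessorHandle;
use generic_handle::BitCrusherHandleRef;

impl BitCrusherProcessor {
    pub fn generic_handle(&self) -> impl AudioProcessorHandle {
        BitCrusherHandleRef::new(self.handle.clone())
    }
}
```

And now we can add a GUI example: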

// examples/bitcrusher_gui.rs

fn main() {

let handle: AudioProcessorHandleRef = Arc::new(processor.generic_handle());

// This actually starts the audio-thread and audio

let _audio_handles = audio_processor_standalone::audio_processor_start(processor);

// This opens the GUI

audio_processor_standalone::gui::open(handle);

}The VST can also change:

use bitcrusher::BitCrusherProcessor;

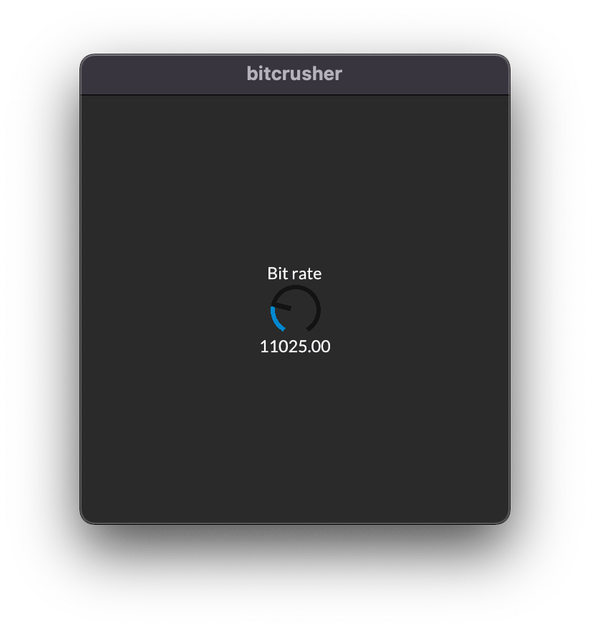

audio_processor_standalone::generic_standalone_vst!(BitCrusherProcessor);With all of this we should get the following on the screen and processing the default input/output device audio:

⚠️ This runs online, control your volume & so on, there may be mistakes above ⚠️

That’s it

For the complete bitcrusher source code, see crates/augmented/audio/audio-processor-bitcrusher.

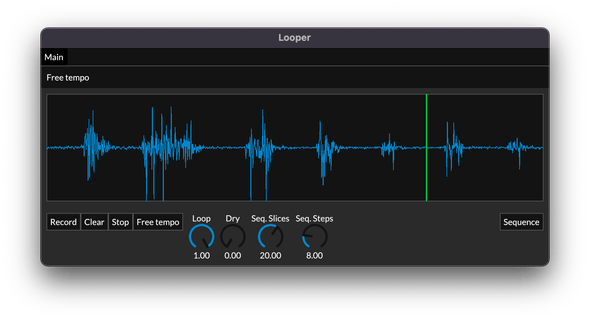

Custom GUI

Custom GUI can be done easily as well and is done for other processors in the repository.

Its GUI looks like this:

All the best